Operating System Memory Management

Memory management is the function of an operating system that handles or manages primary memory and moves processes back and forth between main memory and disc during execution. Memory management keeps track of each and every memory location, regardless of whether it is allocated to some process or free. It determines how much memory is to be allocated to processes, and it decides which process will get memory at what time. It tracks whenever some memory gets freed or unallocated and updates the status accordingly.

This tutorial will teach you basic Memory Management concepts.

Process Address Space

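Process address space is the set of logical addresses that a process references in its code. For example, when 32-bit addressing is in use, addresses can range from 0 to 0xffffffff, that is, 2^32 possible numbers, for a total theoretical size of 4 gigabytes. Operating systems often reserve part of this range for themselves; if a process may use only the lower half, its addresses range from 0 to 0x7fffffff, that is, 2^31 possible numbers, for a total theoretical size of 2 gigabytes.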
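These sizes follow from simple arithmetic: an n-bit address can take 2^n distinct values, from 0 to 2^n - 1. A short sketch that computes this for 31 and 32 bits (the helper names `address_space_size` and `highest_address` are illustrative, not from any library):

```python
GIB = 2 ** 30  # bytes in one gigabyte (binary: 1024 ** 3)


def address_space_size(address_bits: int) -> int:
    """Number of distinct byte addresses expressible with `address_bits` bits."""
    return 2 ** address_bits


def highest_address(address_bits: int) -> int:
    """Largest address: all bits set, i.e. 2 ** address_bits - 1."""
    return address_space_size(address_bits) - 1


for bits in (31, 32):
    size = address_space_size(bits)
    print(f"{bits} bits: 0 to {hex(highest_address(bits))}, "
          f"{size} addresses = {size // GIB} GB")
```

Running it prints `0 to 0x7fffffff` (2 GB) for 31 bits and `0 to 0xffffffff` (4 GB) for 32 bits.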

When memory is allocated to a programme, the operating system takes care of mapping the logical addresses to physical addresses. A programme uses three types of addresses before and after memory is allocated:

| Sr | Memory Addresses | Description |
|---|---|---|
| 1 | Symbolic addresses | The addresses used in source code. Variable names, constants, and instruction labels are the basic elements of the symbolic address space. |
| 2 | Relative addresses | At compile time, a compiler converts symbolic addresses into relative addresses. |
| 3 | Physical addresses | The loader generates these addresses when a programme is loaded into main memory. |

In compile-time and load-time address-binding schemes, the virtual and physical addresses are the same. In the execution-time address-binding scheme, virtual and physical addresses differ.

The set of all the logical addresses a programme produces is called a logical address space. The set of all physical addresses that correspond to those logical addresses is called a physical address space.

The Memory Management Unit (MMU), which is a hardware device, performs the runtime mapping from virtual to physical addresses. The MMU uses the following mechanism to convert a virtual address into a physical address.

- The value in the base register is added to every address generated by a user process, which is treated as an offset, at the time it is sent to memory. For example, if the base register value is 10000, an attempt by the user to use address location 100 is dynamically relocated to location 10100.

- The user program deals only with virtual addresses; it never sees the real physical addresses.
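A minimal sketch of the base-register scheme, using the numbers from the example above (base 10000, address 100). The function name `relocate` is hypothetical; a real MMU does this addition in hardware on every memory access.

```python
def relocate(logical_address: int, base_register: int) -> int:
    """Dynamic relocation: add the base (relocation) register value to an
    address generated by the user process, which is treated as an offset."""
    if logical_address < 0:
        raise ValueError("logical addresses are non-negative offsets")
    return base_register + logical_address


BASE = 10000  # value loaded into the base register for this process
print(relocate(100, BASE))  # 10100, as in the example above
```

Because the process only ever produces offsets, the OS can place it anywhere in memory simply by loading a different value into the base register.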

Static vs Dynamic Loading

The choice between static and dynamic loading has to be made when the computer programme is being developed. If you load your programme statically, the complete programme is compiled and linked at compile time, without leaving any external programme or module dependency. The linker combines the object programme with other necessary object modules into an absolute programme, which also includes logical addresses.

If you write a dynamically loaded programme, your compiler compiles the programme and provides only references for all the modules you want to include dynamically; the rest of the work is done at execution time.

With static loading, the absolute programme (and data) is loaded into memory at load time so that execution can start.

With dynamic loading, the library's dynamic routines are stored on disk in relocatable form and are loaded into memory only when the programme needs them.
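Python offers a loose analogy to dynamic loading: `importlib.import_module` finds and loads a module only when code first asks for it, rather than at programme start. This illustrates the load-on-demand idea in an interpreted language; it is not how an OS loader handles relocatable machine code. The `lazy_call` helper is hypothetical, and `statistics` is just a convenient standard-library module.

```python
import importlib
import sys


def lazy_call(module_name: str, function_name: str, *args):
    """Load `module_name` the first time it is needed, then call one of its functions."""
    module = sys.modules.get(module_name)
    if module is None:
        # Not in memory yet: locate the module on disk and load it now.
        module = importlib.import_module(module_name)
    return getattr(module, function_name)(*args)


print(lazy_call("statistics", "mean", [1, 2, 3]))  # module loaded on first use
```

Later calls find the module already in `sys.modules` and skip the load, much as a dynamically loaded routine stays in memory once brought in.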

Static vs Dynamic Linking

As mentioned above, with static linking the linker combines all other modules that a programme needs into a single executable programme, so that the programme has no dependencies at runtime.

With dynamic linking, the actual module or library is not linked into the programme; instead, a reference to the dynamic module is provided at compile and link time. Dynamic Link Libraries (DLLs) in Windows and shared objects in Unix are good examples of dynamic libraries.
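On a POSIX system, Python's standard `ctypes` module can show dynamic linking at work: the script contains no copy of the C library's `abs` function; it locates the shared library at run time and calls into it. This sketch assumes Linux or macOS (on Windows you would name a DLL instead), and `abs` is simply a convenient function to call.

```python
import ctypes
import ctypes.util

# Resolve the C library's shared-object name at run time
# (for example a libc.so.* file on Linux, libc.dylib on macOS).
libc_path = ctypes.util.find_library("c")

# If no name is found, CDLL(None) on POSIX falls back to the symbols already
# loaded into the running process, which include the C library.
libc = ctypes.CDLL(libc_path)

# Declare the C signature int abs(int) so arguments are converted correctly.
libc.abs.argtypes = [ctypes.c_int]
libc.abs.restype = ctypes.c_int

print(libc.abs(-42))  # executed by the shared C library, not by this script
```

Only a reference (the library name and symbol) lives in the script; the code itself is mapped in from the shared library when the script runs.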

Swapping

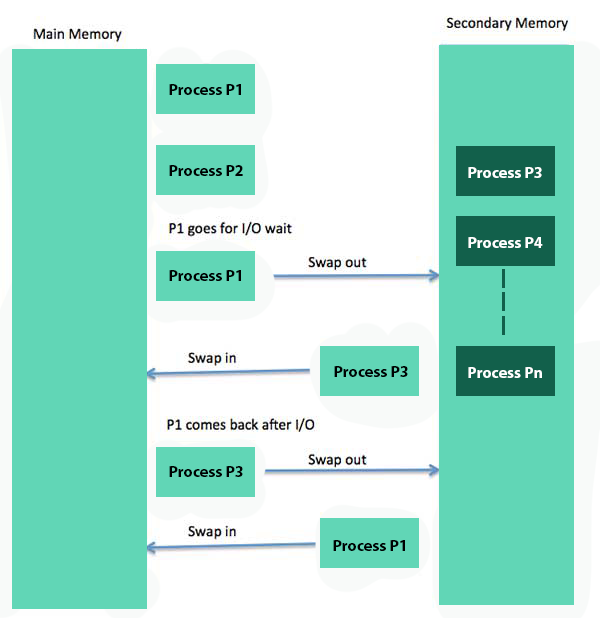

Swapping is a mechanism in which a process is temporarily moved (swapped out) from main memory to secondary storage (disc), making that memory available to other processes. Some time later, the system swaps the process back from secondary storage into main memory.

Although swapping usually affects performance, it helps in running multiple large processes in parallel, and that is why swapping is also known as a memory compaction technique.

The total time taken by swapping includes the time it takes to move the entire process to the secondary disk and then copy it back to memory, as well as the time the process takes to regain main memory.

Suppose the user process is 2048 KB in size and the standard hard disk where swapping takes place has a data transfer rate of about 1 MB (1024 KB) per second. The actual transfer of the 2048 KB process to or from memory will take:

2048KB / 1024KB per second = 2 seconds = 2000 milliseconds

Counting both swap-out and swap-in, it will take a full 4000 milliseconds, plus other overhead while the process competes to regain main memory.
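The same calculation as a small function. It uses only the sizes and transfer rate from the example and ignores seek time and the other overheads mentioned, so the result is a lower bound; the name `swap_time_ms` is illustrative.

```python
def swap_time_ms(process_kb: float, transfer_rate_kb_per_s: float) -> float:
    """Time to move a process one way (out to disk or back in), in milliseconds."""
    return process_kb / transfer_rate_kb_per_s * 1000


one_way = swap_time_ms(2048, 1024)  # 2048 KB at 1024 KB/s
round_trip = 2 * one_way            # swap out plus swap in
print(one_way, round_trip)
```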

Memory Allocation

Main memory usually has two partitions:

- Low Memory − The operating system resides in this memory.

- High Memory − User processes are held in high memory.

The operating system uses the following mechanisms to allocate memory.

| Sr | Memory Allocation | Description |
|---|---|---|
| 1 | Single-partition allocation | In this type of allocation, a relocation-register scheme is used to protect user processes from each other and from changing operating system code and data. The relocation register contains the value of the smallest physical address, while the limit register contains the range of logical addresses. Each logical address must be less than the value in the limit register. |
| 2 | Multiple-partition allocation | In this type of allocation, main memory is divided into a number of fixed-size partitions, where each partition holds exactly one process. When a partition is free, a process is selected from the input queue and loaded into it. When the process terminates, the partition becomes available for another process. |
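A sketch of fixed-size multiple-partition allocation. The partition sizes, process names, strict first-come-first-served input queue, and the first-fit choice of partition are all assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Partition:
    size_kb: int
    process: Optional[str] = None  # name of the resident process, or None if free


def load_from_queue(partitions, input_queue):
    """Move processes from the head of the input queue into free partitions.

    Each partition holds at most one process; the first free partition that
    is large enough is chosen (first fit, an assumption of this sketch).
    """
    while input_queue:
        name, size_kb = input_queue[0]
        target = next(
            (p for p in partitions if p.process is None and p.size_kb >= size_kb),
            None,
        )
        if target is None:
            break  # the process at the head waits until a partition is freed
        target.process = name
        input_queue.popleft()


def terminate(partitions, name):
    """Release the partition held by a terminated process."""
    for p in partitions:
        if p.process == name:
            p.process = None


partitions = [Partition(100), Partition(200), Partition(300)]
queue = deque([("P1", 150), ("P2", 90), ("P3", 250), ("P4", 120)])

load_from_queue(partitions, queue)
print([p.process for p in partitions], list(queue))  # P4 must wait

terminate(partitions, "P1")
load_from_queue(partitions, queue)
print([p.process for p in partitions])  # P4 takes the freed partition
```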

Fragmentation

As processes are loaded into and removed from memory, the free memory space is broken into small pieces. After a while, processes cannot be allocated to these memory blocks because the blocks are too small, and the blocks remain unused. This problem is known as fragmentation.

Fragmentation is of two types:

| Sr | Fragmentation | Description |
|---|---|---|
| 1 | External fragmentation | The total free memory is enough to satisfy a request or hold a process, but it is not contiguous, so it cannot be used. |
| 2 | Internal fragmentation | The memory block assigned to a process is larger than the process needs. Part of the block is left unused, since no other process can use it. |

External fragmentation can be reduced by compaction, that is, shuffling memory contents so that all free memory is placed together in one large block. To make compaction feasible, relocation must be dynamic.
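A sketch of compaction on a simple memory map. Representing memory as a list of `(owner, size_kb)` blocks is an assumption of this example; a real OS would also copy each process's contents and update its relocation register, which is why relocation has to be dynamic.

```python
def compact(memory):
    """Slide all allocated blocks together (keeping their order) so that all
    free space forms a single hole at the end of memory.

    `memory` is a list of (owner, size_kb) blocks; owner None marks a free hole.
    """
    allocated = [block for block in memory if block[0] is not None]
    free_total = sum(size for owner, size in memory if owner is None)
    return allocated + ([(None, free_total)] if free_total else [])


def largest_hole(memory):
    """Size of the biggest contiguous free block."""
    return max((size for owner, size in memory if owner is None), default=0)


memory = [("P1", 100), (None, 50), ("P2", 200), (None, 30), ("P3", 80), (None, 70)]
request_kb = 120

print(largest_hole(memory) >= request_kb)  # False: 150 KB free, but scattered
memory = compact(memory)
print(memory)
print(largest_hole(memory) >= request_kb)  # True: one 150 KB hole
```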

Internal fragmentation can be reduced by assigning the smallest partition that is still large enough for the process.
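Choosing the smallest partition that is still large enough is commonly called best fit. The sketch below compares it with first fit on made-up partition sizes and reports the internal fragmentation each choice leaves behind; the function names are illustrative.

```python
def best_fit(free_partitions, process_kb):
    """Index of the smallest free partition that can still hold the process, or None."""
    fitting = [(size, i) for i, size in enumerate(free_partitions) if size >= process_kb]
    return min(fitting)[1] if fitting else None


def first_fit(free_partitions, process_kb):
    """Index of the first free partition that can hold the process, or None."""
    return next((i for i, size in enumerate(free_partitions) if size >= process_kb), None)


free_partitions = [300, 120, 500, 200]  # sizes in KB (example values)
process_kb = 110

for strategy in (first_fit, best_fit):
    i = strategy(free_partitions, process_kb)
    waste = free_partitions[i] - process_kb  # internal fragmentation
    print(strategy.__name__, free_partitions[i], waste)
```

Here first fit places the process in the 300 KB partition and wastes 190 KB, while best fit picks the 120 KB partition and wastes only 10 KB.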

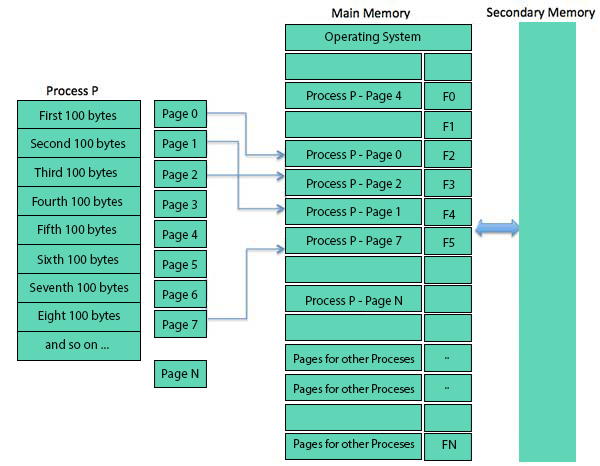

Paging

A computer can address more memory than the amount physically installed on the system. This extra memory is called virtual memory, and it is a section of the hard disk that is set up to emulate the computer's RAM. The paging technique plays an important role in implementing virtual memory.

Paging is a memory management technique in which the process address space is broken into blocks of the same size called pages (the size is a power of 2, between 512 bytes and 8192 bytes). The size of a process is measured in the number of pages.

Similarly, main memory is divided into small fixed-size blocks of (physical) memory called frames, and the size of a frame is kept the same as that of a page so that main memory is used optimally and external fragmentation is avoided.
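Because pages are fixed-size, a process needs the ceiling of its size divided by the page size, and the unused tail of the last page is internal fragmentation. A sketch with an assumed 10,000-byte process and 4096-byte pages (the helper names are illustrative):

```python
def is_power_of_two(n: int) -> bool:
    return n > 0 and n & (n - 1) == 0


def pages_needed(process_bytes: int, page_size: int) -> int:
    """Number of fixed-size pages (and hence frames) a process occupies."""
    if not is_power_of_two(page_size):
        raise ValueError("page size must be a power of 2")
    return (process_bytes + page_size - 1) // page_size  # ceiling division


process_bytes = 10_000
page_size = 4096  # a power of 2 within the 512 to 8192 byte range

n = pages_needed(process_bytes, page_size)
unused = n * page_size - process_bytes  # internal fragmentation in the last page
print(n, unused)  # 3 2288
```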

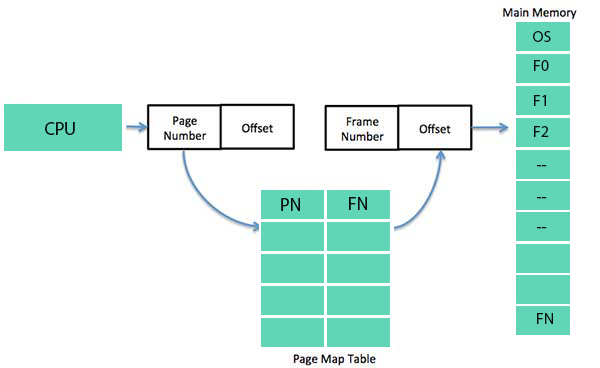

Address Translation

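A page address is called a logical address and is represented by a page number and an offset.

Logical Address = Page number + page offset

A frame address is called a physical address and is represented by a frame number and an offset.

Physical Address = Frame number + page offset

Here "+" means combining the two fields (the number forms the high-order bits and the offset the low-order bits), not arithmetic addition.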

To keep track of the relationship between a page of a process and a frame in physical memory, a data structure called the page map table is used.

When the system allocates a frame to a page, it translates this logical address into a physical address and creates an entry in the page table, which is used throughout the execution of the programme.
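A minimal sketch of translation through a page map table. The 1024-byte page size and the table contents are made-up values; the split into page number and offset uses bit operations, which for a power-of-2 page size is the same as `frame_number * PAGE_SIZE + offset`.

```python
PAGE_SIZE = 1024                          # bytes per page/frame (example value, a power of 2)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 10 offset bits for 1024-byte pages

# Example page map table for one process: page number -> frame number
page_table = {0: 5, 1: 2, 2: 7}


def translate(logical_address: int) -> int:
    page_number = logical_address >> OFFSET_BITS  # high-order bits
    offset = logical_address & (PAGE_SIZE - 1)    # low-order bits
    if page_number not in page_table:
        raise LookupError(f"page fault: page {page_number} is not in a frame")
    frame_number = page_table[page_number]
    # Frame number in the high-order bits, offset unchanged in the low-order bits.
    return (frame_number << OFFSET_BITS) | offset


print(translate(2100))  # page 2, offset 52 -> frame 7 -> 7220
```

An address on a page with no table entry raises `LookupError`, standing in for the page fault that would make the OS bring that page in.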

When a process is executed, its pages are loaded into any available memory frames. Suppose you have an 8 KB programme, but your memory can accommodate only 5 KB at a given point in time; this is where paging comes into the picture. When a computer runs out of RAM, the operating system (OS) moves idle or unneeded pages of memory to secondary memory to free up RAM for other processes, and brings them back when the programme needs them.

This process continues throughout the execution of the programme: the OS keeps removing idle pages from main memory, writing them to secondary memory, and bringing them back when the programme needs them.
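The text does not say how the OS chooses which page to move out; a common policy is least recently used (LRU). The sketch below assumes LRU, 1 KB pages (so the 8 KB programme has pages 0 to 7 and 5 KB of memory gives 5 frames), and a made-up sequence of page references.

```python
from collections import OrderedDict


def simulate_lru(reference_string, frame_count):
    """Count page faults when `frame_count` frames hold pages, evicting the
    least recently used page whenever a new page must be brought in."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    evicted = []
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)  # hit: mark as most recently used
            continue
        faults += 1  # page fault: the page must be read from secondary memory
        if len(frames) == frame_count:
            victim, _ = frames.popitem(last=False)  # least recently used page
            evicted.append(victim)  # a real OS writes it out if it was modified
        frames[page] = None
    return faults, evicted


references = [0, 1, 2, 3, 4, 0, 5, 6, 1, 7]  # pages of the 8 KB programme
faults, evicted = simulate_lru(references, frame_count=5)
print(faults, evicted)  # 9 [1, 2, 3, 4]
```

Page 0 stays resident because it was used again just before memory filled up, so the pages evicted are the ones idle the longest.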

Advantages and Disadvantages of Paging

Here is a list of the advantages and disadvantages of paging:

- Paging reduces external fragmentation, but still suffers from internal fragmentation.

- Paging is simple to implement and is regarded as an efficient memory management technique.

- Since pages and frames are of equal size, swapping becomes very easy.

- The page table requires extra memory space, so paging may not be a good fit for a system with little RAM.

Segmentation

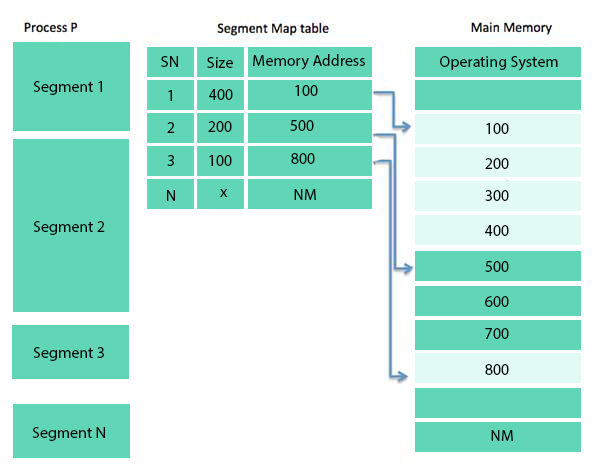

Segmentation is a memory management technique in which each job is divided into several segments of different sizes, one for each module that contains pieces performing related functions. Each segment is actually a different logical address space of the programme.

When a process is executed, its segments are loaded into non-contiguous memory, though each segment is loaded into a contiguous block of available memory.

Segmentation memory management works very much like paging, except that segments are of variable length, whereas pages in paging are of fixed size.

A programme segment contains the programme's main function, utility functions, data structures, and so on. The operating system maintains a segment map table for each process, and a list of free memory blocks along with segment numbers, their sizes, and corresponding memory locations in main memory. For each segment, the table stores the segment's starting address and its length. A reference to a memory location includes a value that identifies a segment and an offset.
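A minimal sketch of how a (segment, offset) reference is translated through a segment map table that stores each segment's starting address and length. The table contents are made-up numbers, and raising an exception stands in for the hardware trap that an offset beyond the segment length would cause.

```python
from typing import NamedTuple


class SegmentEntry(NamedTuple):
    base: int   # starting physical address of the segment
    limit: int  # length of the segment in bytes


# Example segment map table for one process
segment_table = {
    0: SegmentEntry(base=1400, limit=1000),  # e.g. main function
    1: SegmentEntry(base=6300, limit=400),   # e.g. utility functions
    2: SegmentEntry(base=4300, limit=1100),  # e.g. data structures
}


def translate(segment: int, offset: int) -> int:
    entry = segment_table.get(segment)
    if entry is None:
        raise LookupError(f"invalid segment {segment}")
    if not 0 <= offset < entry.limit:
        raise IndexError(f"offset {offset} outside segment {segment} (length {entry.limit})")
    return entry.base + offset


print(translate(2, 53))  # 4300 + 53 = 4353
```

Unlike paging, the offset is checked against a per-segment length, because segments vary in size.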